What are the best free tools to analyse log files and fix crawl waste?

Log files reveal how bots like Googlebot interact with your website. When search engines focus on broken links, outdated URLs or thin content, crawl budget gets wasted. These free tools help you identify crawl inefficiencies, review server request patterns, and focus crawling on pages that matter most.

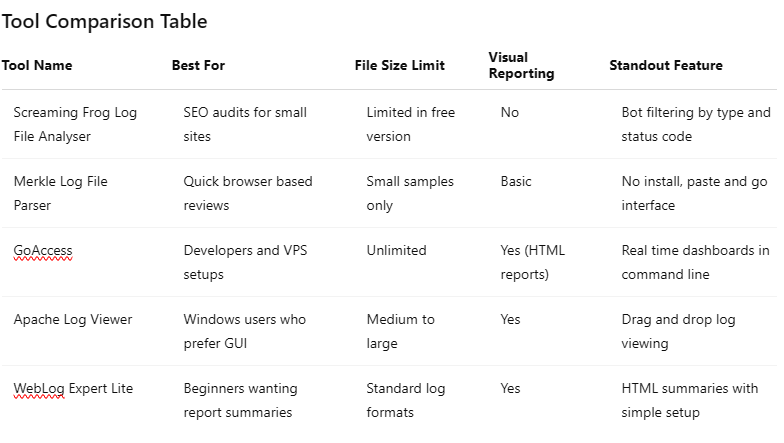

1. Screaming Frog Log File Analyser

This desktop tool lets you upload raw server logs and see which URLs Googlebot visits. You can find repeated crawls of less important pages and check how often key pages get accessed. It complements the Screaming Frog SEO Spider by showing what bots actually requested, not only what a crawl of your own site discovers.

Key Features

- Bot filters: Focus on Googlebot or other search crawlers

- Status code tracking: Highlight crawl frequency and responses

- Data export: Review logs in spreadsheets or reports

Limitations

- Free version: Caps the number of log lines you can import

- Best for: Small to midsize log file SEO audits

This tool offers direct insight into real bot activity and can be used to diagnose crawl inefficiencies across various segments of your site.

2. Merkle’s TechnicalSEO.com Log File Parser

Merkle’s browser tool works with copy-pasted server log snippets. It breaks them into crawl entries with URLs, timestamps and user agent details, which helps beginners get started with access log reviews.

Key Features

- Interface: Paste and parse logs with no install

- Output: View response codes, URLs and crawl timing

- Privacy: Logs processed locally in your browser

Benefits

- Best for: Quick checks, beginners, nontechnical audits

- Drawback: Cannot process large log sets

This parser is ideal for testing smaller samples. It helps new SEOs explore crawl frequency trends and understand how bots behave differently across page types.
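
To see what "breaking a snippet into crawl entries" means in practice, here is a minimal Python sketch. It assumes your server writes the common Combined Log Format used by default Apache and Nginx setups. The `parse_line` helper and the sample line are made up for illustration and are not taken from any of the tools above.

```python
import re
from datetime import datetime

# Combined Log Format (assumption: your server uses this default layout).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    # Timestamps look like 10/Mar/2025:06:25:14 +0000
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    return entry

# Hypothetical sample line, for illustration only.
sample = (
    '66.249.66.1 - - [10/Mar/2025:06:25:14 +0000] '
    '"GET /blog/old-post?sort=asc HTTP/1.1" 301 0 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)

entry = parse_line(sample)
print(entry["url"], entry["status"], entry["user_agent"])
```

If your logs use a custom format, the pattern will need adjusting; lines that do not match simply return `None`, so they can be skipped or counted.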

3. GoAccess: Command Line Visual Reporting

GoAccess is an open source log viewer that runs in the terminal. It converts server logs into live dashboards and interactive HTML reports. It works with both Apache and Nginx logs.

Key Features

- Real time HTML output: Crawl stats updated instantly

- Filtering: Drill down by bot, method and response

- Setup: Simple installation on most Linux servers

When to Use

- Best for: VPS setups, developers, Linux users

- Drawback: Requires terminal knowledge

Use GoAccess to identify crawl anomalies, wasted crawl effort, and user agent behaviour. It’s ideal for performing crawl efficiency audits on self hosted environments.

4. Apache Log Viewer for Windows

Apache Log Viewer gives Windows users an accessible interface for exploring server logs. It opens large files and sorts entries by status, date or HTTP method.

Key Features

- GUI navigation: Works like a typical Windows app

- Visual filters: Explore by response status or date

- Response timeline: Understand crawl timing and diagnostics

Limitations

- Platform: Windows only

- Best for: Desktop viewing and quick audits

This viewer works well for nontechnical users who want to detect recurring crawl behaviour, monitor crawl stats reports, and find soft 404s without coding knowledge.

5. WebLog Expert Lite

WebLog Expert Lite provides visual summaries of access logs. It supports standard log file formats from Apache and Nginx and is beginner friendly.

Key Features

- Report output: Creates HTML visual summaries

- Format support: Works with Apache and Nginx

- Interface: Easy to install and beginner friendly

Benefits

- Best for: New SEOs, light reporting needs

- Drawback: No live or real time features

Use this tool to understand server request patterns, track crawler visits, and build familiarity with bot behaviour analysis in a visual format.

Pro Tip: Paste a log snippet into Merkle’s parser monthly to catch hidden crawl issues early. It’s fast and needs nothing more than a copy of the log lines.

Improve Your Site’s Crawl Efficiency

Don’t let Google waste time on the wrong pages. We’ll audit your site’s crawl paths and show you what to fix.

What does crawl waste look like in a log file?

You can spot crawl waste by reviewing access logs for common inefficiencies:

- Repeated hits on redirected or outdated URLs

- Crawling of parameter-heavy or filter-driven pages

- Large volumes of 404 or soft 404 responses

- Frequent visits to thin content with low SEO value

These indicators point to wasted crawl budget and show where bot attention could be moved to more valuable pages.
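
Some of the checks in the list above can be sketched in a few lines of Python. This is a rough illustration, not a full audit: it assumes you already have each request as a `(url, status, user_agent)` tuple, and the category names and sample hits are hypothetical. It also identifies bots by user agent text only, which can be spoofed.

```python
from collections import Counter
from urllib.parse import urlsplit

def classify_hit(url, status):
    """Label one bot request with a crawl-waste category, or 'ok'."""
    if status in (301, 302, 307, 308):
        return "redirect"
    if status in (404, 410):
        return "not_found"
    if urlsplit(url).query:
        # Any query string counts as a parameter URL in this sketch.
        return "parameter_url"
    return "ok"

def summarise(hits, bot_token="Googlebot"):
    """Count categories for requests whose user agent contains bot_token."""
    counts = Counter()
    for url, status, user_agent in hits:
        if bot_token in user_agent:
            counts[classify_hit(url, status)] += 1
    return counts

# Hypothetical sample data, for illustration only.
hits = [
    ("/products?colour=red&sort=price", 200, "Googlebot/2.1"),
    ("/old-page", 301, "Googlebot/2.1"),
    ("/missing", 404, "Googlebot/2.1"),
    ("/services", 200, "Googlebot/2.1"),
    ("/services", 200, "Mozilla/5.0 (Windows NT 10.0)"),
]

summary = summarise(hits)
for category, count in summary.most_common():
    print(category, count)
```

Soft 404s and thin content will not show up here, because both return a normal 200 status. Those need a look at the pages themselves.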

How to fix crawl waste after log analysis

Once crawl inefficiencies are identified, address them using structured actions:

- Block unnecessary URLs: Use robots.txt to keep bots out of low-priority sections

- Guide crawlers: Add high value pages to your XML sitemap

- Fix broken links: Update internal links that point to dead URLs, and redirect outdated URLs to their closest live equivalent

- Control indexing: Apply noindex to low value pages, and leave those pages crawlable so bots can read the directive

- Strengthen internal links: Use descriptive anchor text to boost undercrawled content

Fixing these issues helps ensure crawl budget is spent on pages that improve visibility. Compare your findings with what Search Console reports, and keep in mind that log files record every individual request, so they show detail that summary reports leave out.
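
Before you publish a robots.txt change, it helps to test which URLs the new rules would block. The sketch below uses Python's standard `urllib.robotparser` module. The rules, URLs and the `blocked_urls` helper are hypothetical examples, so swap in paths from your own log analysis.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft rules, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given rules would stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# URLs you might have seen crawled heavily in your logs (made up here).
urls = [
    "https://example.com/search?q=shoes",
    "https://example.com/cart/checkout",
    "https://example.com/services/",
]

blocked = blocked_urls(ROBOTS_TXT, urls)
print(blocked)
```

The standard library parser matches rules by simple path prefix, so treat the result as a rough check. Search engines may interpret wildcard rules differently, and it is worth confirming important changes with the search engine's own testing tools.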

Pro Tip: If Googlebot ignores key pages, strengthen your internal links before touching anything else.

Need Help Reading Server Logs?

We turn complex log files into clear actions to improve your SEO. Let our technical team guide you.

FAQ: Log Files and Crawl Efficiency

What is crawl budget in SEO?

It is the number of pages a search engine will crawl from your site within a timeframe. A crawl efficiency audit checks that this budget goes to the pages that matter.

Can I analyse logs without server access?

Yes. Some hosts let you export logs, and tools like Merkle’s parser work with short snippets.

What is a soft 404?

It is a page that returns a normal 200 status but shows an error message or no real content, wasting bot time and resources.

How often should I check my logs?

Monthly is fine for most sites. High traffic platforms might benefit from weekly reviews.

Does this replace Google Search Console?

No. GSC shows trends and errors, but log files record every bot request as it happened, with URL-level detail that GSC misses.

Final thoughts: Start with what you can access

You do not need advanced skills or paid software to improve crawl efficiency. Each tool in this guide supports better crawl diagnostics and budget alignment.

Start small. Try a browser tool like Merkle for a quick crawl analysis. For deeper insights, use GoAccess or Screaming Frog to review crawl stats reports and identify missed opportunities.

Even small sites benefit when search engines prioritise important pages. Analysing logs regularly keeps your SEO focused and actionable.